In the Year at a Glance, it's easy to get lost in the title of the unit and the list of TEKS, as if they were some kind of checklist. The KEY standard that guides all of them in this unit is 4A. All of the work you do with the rest of the standards hinges on this one:

Students must look at dramatic conventions to understand, make inferences, draw conclusions, and provide text evidence about how these structures and elements enhance the text.

The key part here is that the dramatic elements have a purpose: to enhance style, tone, and mood (2A, 2D, and to some extent 1B). More specifically, the standard gives us instances that help us know what kind of dramatic conventions we might consider: monologues, soliloquies, and dramatic irony.

So our work with this standard can't really be boiled down to putting a single TEK on the board as the objective. It's more complicated and interwoven than that because we are working with author's purpose and craft and literary analysis.

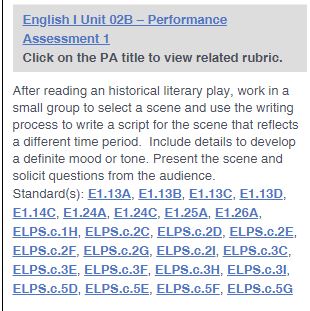

The performance assessment can also confound planning. If you're not careful, the way this assignment is presented can turn into a low-rigor assessment that better fits the 6th grade TEK that asks students to compare how the setting influences plot. We are doing more than that here in 9th grade English.

Notice that this performance assessment asks students to recreate a scene in a different time period. We can't leave out the tone and mood part either. But what is not mentioned here is that the whole point of our drama unit is to examine how writers, directors, or actors can communicate the theme, tone, and mood through the dramatic elements.

This lesson can easily devolve into a simple retelling instead of a purposeful use of dramatic conventions. And the assessment can devolve into an activity or assignment if the teacher has not addressed the lessons about dramatic conventions during the reading phase of this process.

So if we have read The Importance of Being Earnest, we are going to have to address the dramatic elements that are present in the text. I did a little research and found several categories of dramatic conventions:

Since the standards suggest monologues and the play has several, let's just pick that one.

How does Wilde use the monologues to communicate his theme? That has to be the focus of the modeling and gradual release of the analysis as students read the scenes and selected monologues.

Applying it to writing:

When it's time to write for the assessment, the writers must first explore what theme they wish to convey. THEN they can write a scene where a monologue is used purposefully to convey the theme. Students can make decisions about which character would best deliver those lines and describe why. They can make decisions about how and why a character would compose and deliver those lines. They can lift particular lines and phrases from the monologue that best support the theme.

Students could then trade their compositions and see if the other groups or teams could discern the theme and debate why the character was or was not the best choice to deliver the message. Students can discuss the evidence that was or was not effective in delivering the author's message.

Our work here is NOT about adapting a play to a new setting. What are kids actually learning? They are learning how authors use the dramatic elements and conventions of drama to make meaning. And they are learning how to do the same for their own messages.

Note: Students in English II could read the same play, but focus on the motifs and archetypes used by Wilde to communicate the themes.